Depending on who you talk to, the cloud is either (A) the future of business (and personal) computing, (B) a ruse by providers to get everybody stuck paying ever-increasing rates, (C) an epidemic of security breaches waiting to happen, or (D) an overhyped development that will lead to some interesting technological advancements but won’t ultimately be all that earth-shattering. So what is a business leader to do when faced with the decision of whether to move to the cloud? Naturally, it falls to the CIO to somehow square the circle and make a wholesale move to the cloud while guaranteeing lower costs, perfect security, and unlimited accessibility. Before your business takes on this endeavor, though, there are a few things about the cloud your CIO would probably like to clear up.

If your applications are only a couple of years old, you probably won’t have much difficulty moving them into the cloud. And of course infrastructure services like Azure are a great place to build and test new applications. But some legacy apps might be better left in the environments where they’re currently hosted. This is why many companies set up hybrid environments—getting the best of both the on-site and cloud worlds (or, the private and public cloud worlds, depending on how you want to phrase it). You may for instance benefit most from using Office 365 for collaboration and document sharing while hosting Active Directory on-site. Even if you do end up moving your entire business into the cloud, you should think of it as a transition over time, not any type of migration that can or should be accomplished overnight.

It seems like every day there’s a story in the news about some kind of data hack: Target and other retailers; the Heartbleed Bug; Jennifer Lawrence. The catch-22 is that businesses feel they need to move to the cloud to remain competitive but by doing so they’re opening themselves up to myriad security risks. Here’s the thing to keep in mind: the Target and Heartbleed issues weren’t really about the cloud per se—they were about the internet in general. Any data that is accessible online is potentially susceptible to hacking. Not many businesses can operate completely offline. The celebrities having their iCloud photos stolen likewise had nothing to do with any vulnerability inherent to the cloud. Instead, it was a matter of hackers figuring out passwords and the answers to security questions. (Though Apple really was remiss in not setting limits to password attempts—a common industry practice that would have made the theft impossible.)
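To make the idea of limiting password attempts concrete, here is a minimal sketch of an attempt limiter. It is an illustration only, not how Apple or any particular provider does it: the class name, the threshold of five failures, and the 15-minute window are all assumptions chosen for the example.

```python
import time


class LoginAttemptLimiter:
    """Lock an account after too many failed logins inside a time window."""

    def __init__(self, max_attempts=5, window_seconds=900, clock=time.monotonic):
        self.max_attempts = max_attempts      # assumed threshold
        self.window_seconds = window_seconds  # assumed window (15 minutes)
        self.clock = clock                    # injectable so tests need not wait
        self._failures = {}                   # account -> timestamps of recent failures

    def _recent(self, account):
        # Keep only the failures that are still inside the window.
        cutoff = self.clock() - self.window_seconds
        recent = [t for t in self._failures.get(account, []) if t > cutoff]
        self._failures[account] = recent
        return recent

    def is_locked(self, account):
        return len(self._recent(account)) >= self.max_attempts

    def record_failure(self, account):
        self._recent(account).append(self.clock())

    def record_success(self, account):
        # A successful login clears the account's failure history.
        self._failures.pop(account, None)
```

A login handler would call `is_locked` before checking the password and `record_failure` after each wrong guess. A limit like this slows automated guessing considerably, though it does nothing about weak security questions.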

With on-site servers, you have to worry about fires, floods, earthquakes, power outages, etc. With cloud services, it’s blind subpoenas—which even in the extremely unlikely event that they’re issued won’t necessarily spell disaster for your business. The only other thing that might make cloud datacenters more risky is that they house information from many businesses, much like a bank has many people’s money, which makes them a more attractive target. But, also like a bank, cloud providers have a lot more resources to devote to security. There are, however, many aspects of security that you can take responsibility for yourself—like setting multifactor authentication standards for passwords, and creating BYOD policies.

The cloud offers some pretty amazing capabilities, and it opens the way for countless untold advances in the future. But, before moving into the cloud, you should have a well worked-out idea of what you hope to achieve by doing so. Are you looking for more flexible mobile access? Do you need your server capacity to be highly scalable? Or are you looking for more seamless integration between your various software services? To know if the cloud is right for you, and to be able to tell if the cloud is working for you, you need to first have some goals in place.

In a lot of circumstances, the cloud can save you money. In some, it may be more secure. Like any other business decision, though, the choice of whether to move to the cloud starts with understanding where you are now and having a good idea where you want to go. This is important to point out because there’s a perception out there that everyone should flip the magical switch that moves everything to the cloud all at once, making it all cheaper and more efficient, and opening the way to all kinds of new developments over the horizon.

The bottom line is your move to the cloud should begin with a lot of planning. You need to know not just what milestones you hope to reach but what steps you’ll take to get there from where you are today. The CIO’s job is to help you work out this strategy and then to see that it gets successfully implemented. But someone also needs to make a point of focusing on the business side of the equation, asking questions like how will this change impact our organization day-to-day, how much down time should we anticipate, and how does this move position us with regard to future transitions and upgrades? Knowing what the cloud can and can’t do, and separating the real from the imagined risks, is a good first step.

If you have any questions about cloud services or are interested in obtaining a quote for cloud and hybrid cloud solutions, please contact QWERTY Concepts at 877-793-7891 or visit the cloud computing web page.

When a database must stay tucked away in an enterprise data center, running a dependent service in the public clouds spells "latency." Or does it?

Most hybrid architectures propose moving only certain application building blocks to the cloud. A prime example is a Web front-end on Amazon Web Services and a back-end transaction server in an on-premises data center. But a new wave of hybrid thought says it's OK to separate data from applications, even to allow multiple applications to run from multiple cloud provider sites against a common data source that can be located in a company's data center.

Not so long ago (like yesterday) that suggestion might, at a minimum, earn you an eye roll and a dismissive hand wave. In more extreme cases, we're talking a recommendation for an IT straitjacket. Separating data from applications and putting half in the cloud would be nothing short of insane. The latency and security problems will kill you, right?

Not necessarily. Separating data services from application services is nothing new; we do it all the time in data centers today. However, we typically provide high-speed links between the two to avoid latency, and, since it is usually all locked in a secure data center, security is less of an issue. Some organizations actually split services between two data centers, but in the process have built the dedicated network bandwidth necessary to support the traffic and ensure adequate performance. In that situation, security is managed by keeping it all behind the firewall, limiting access, and encrypting data in motion.

I equate the first situation to having a private conversation with my wife while we're in the same room. The second is having the same conversation, but now we're yelling between rooms within our home (more likely this is the model for a conversation with my teenage daughter). In this situation, the communications may not be ideal, and the risk someone else may hear is higher, but it's still a somewhat controlled environment.

Complex or not, hybrid cloud is popular in enterprises.

When it comes to moving application services to the cloud and leaving the data in the data center, some people would claim an appropriate analogy is using a bullhorn to have a conversation with your teenager while you are home and she is at the mall.

New technologies and cost models, however, offer an alternative to connecting services over the public Internet. One contributing factor is the ability to get cost-effective, high-speed network connections from cloud provider sites to data centers, of sufficient quality to minimize latency and guarantee appropriate performance. In addition to services offered directly by hosting vendors, colocation companies are jumping into the fray to offer high-speed connections between their colo data centers, as well as from these locations directly to some cloud provider sites.
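One rough way to judge whether a link is "of sufficient quality" is to multiply the number of sequential database round trips in a transaction by the extra round-trip time the link adds. The sketch below does that arithmetic. The function name and every number in it (40 queries, 0.5 ms on the LAN, 2 ms on a dedicated link, 40 ms over the public Internet) are hypothetical figures for illustration, not measurements.

```python
def added_latency_ms(sequential_queries, rtt_ms, local_rtt_ms=0.5):
    """Extra time per transaction when each query crosses the WAN instead of the LAN."""
    return sequential_queries * (rtt_ms - local_rtt_ms)


# Hypothetical transaction that issues 40 queries one after another.
for label, rtt in [("dedicated link", 2.0), ("public Internet", 40.0)]:
    print(f"{label}: +{added_latency_ms(40, rtt):.0f} ms per transaction")
```

The point of the exercise is that chatty applications amplify every millisecond of link latency, so the same application can be fine over one connection and unusable over another.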

For enterprise IT, there are three things to consider when deciding whether this model is for you and considering options for high-speed network connections.

1. Security: While data may have traveled only behind the firewall in the past, now it is stepping out over a network, potentially among multiple cloud locations. Keeping this information safe in transit and dealing with access-control issues will be critical, as discussed in depth in this recent InformationWeek Tech Digest. Heck, in a post-Snowden world, the business may even care. Settling compliance issues, such as encrypting data before it goes over the wire and making sure data doesn't travel outside the appropriate geographic borders, is also critical before you provision any new connections.

2. Network bandwidth and performance: Depending on the application's sensitivity to latency, the cost to guarantee a given performance level over the network may eliminate any savings gained in moving the application to the cloud. My advice: Know exactly what you're getting before committing. SLAs need to be clear, as do penalties, and make sure you have monitoring tools to validate performance. It's particularly important to look not just at the network specs but the real-world, end-to-end performance. Benchmark over a period of time before moving the application to gain a base model. Run simulated transactions and workloads based on the benchmark; that will give you a sense of equivalent performance in the new environment. Recognize as well that if you are going into a public cloud, your mileage may vary on any given day. This is discussed in depth in the 2014 Next-Gen WAN report.

3. Business continuity/disaster recovery: The number of things that could go wrong just increased exponentially. Meanwhile, managing recovery plans becomes more complex. Map disaster scenarios prior to getting locked in, and budget for redundancy. Cable cuts and floods happen. Be prepared at the onset, rather than scrambling along with everyone else affected if a network connection is impacted. Recognize as well that you now have two different environments that may require two different plans for DR and have different recovery times. Of the three, this will likely be the most complex to work out.
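The benchmarking advice in point 2 can be sketched in a few lines: summarize latency samples from the current environment, do the same for the simulated workload in the new one, and compare. This is a minimal sketch under assumptions. The function names and the 20% tolerance are hypothetical, and the samples would come from whatever monitoring tool you already use.

```python
import statistics


def latency_summary(samples_ms):
    """Return the median and 95th-percentile latency for a list of samples (ms)."""
    ordered = sorted(samples_ms)
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(ordered, n=20, method="inclusive")[-1]
    return {"median": statistics.median(ordered), "p95": p95}


def meets_baseline(baseline_ms, candidate_ms, tolerance=1.2):
    """True if the candidate's median and p95 stay within tolerance x the baseline."""
    base = latency_summary(baseline_ms)
    cand = latency_summary(candidate_ms)
    return all(cand[key] <= base[key] * tolerance for key in ("median", "p95"))
```

Comparing the 95th percentile as well as the median matters here, because a public cloud link that looks fine on average can still have the bad days the text warns about.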

While I've focused on the challenges, there are clearly benefits to be had if you need the scalability of a cloud environment for your applications. But just because we can doesn't mean we should. Much like raising a teenager, each scenario is different, and you need to be up for the challenge before you head down that road.

With proper preparation, cloud-based disaster recovery will enable your organization to weather the season of storms and power outages.

The season of power outages has arrived. We can expect coastal tropical storms and hurricanes and Midwest tornadoes to bring us a season of outages and, unfortunately, lots of loss. Government agencies, if not properly prepared, will see applications and data centers swept away with the same speed and suddenness as the wild weather winds -- even with so much advanced technology and outstanding preemptive tools and systems available.

It was only two years ago that Hurricane Sandy hit the East Coast, knocking out data centers from Virginia to New York to New Jersey. They lost public power and went dark for days, leaving systems vulnerable and lots of important information unavailable.

For government agencies that use their own internal data centers to house applications, public multi-tenant clouds offer a lower-cost, easy-to-deploy disaster recovery/continuity of operations (DR/COOP) solution. The steps below can help data centers plan and execute effectively with minimal to no disruption in the production environment.

1. Know your mission-critical applications. Determine which of your Web-based applications cannot go down for even a short (or extended) period of time. Identify these applications along with their dependencies and minimal hardware requirements to operate. Document your findings as these will become part of your DR/COOP plan and will help you when you move on to step two.

2. Choose a compliant cloud service provider or give a checklist to the one you have. Identify the right cloud service provider (CSP) that can support your business and technical requirements. If possible, choose a CSP that uses the same hypervisor that you use in-house. This will make mirroring a lot easier, faster, and cheaper in the long run.

3. Configure remote mirrored virtual machines. Depending on the hypervisor you currently are using for virtualization, either set up the data center to automatically mirror these virtual machines (VMs), or arrange to manually set up the remote VMs. Either way, make sure there is a mirrored VM for each production system that needs emergency backup.

4. Set up the failover to be more than just DNS. With the mirrored VMs tested and in place, it's time to select a technology that will handle the failover if and when a disaster occurs. When selecting this technology, avoid one that depends on a change to Internet domain name system records. While a DNS change will work, in most cases there will be a downtime of many hours or possibly even more than a day before users can reach the DR/COOP site. Therefore, seek a technology that can detect a failure in your primary data center and redirect end users instantly to the DR/COOP solution.

5. Perform regular failover tests. With the above steps complete, the final step is an end-to-end failover test, which must be run routinely against the DR/COOP site. Depending on internal policies, this test may be as small as one application's individual failover, or you may wish to schedule a full site failover. Whichever is done, it is important to document the process and the steps taken when performing the test, and to keep a clear record of results after each test. If your failover plan did not work, refer back to your documentation, identify what did not work as expected, make the adjustments to your plan (and documentation), and test again. You may need to do this multiple times until you have a bulletproof failover plan.
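Step 4 asks for a technology that detects a primary failure and redirects users without waiting on DNS. The decision logic behind such a tool can be sketched as follows. This is a simplified illustration rather than any vendor's product: the class name, the site labels, and the choice to trip after three consecutive failed health checks are assumptions, and a real deployment would feed it results from actual probes.

```python
class FailoverMonitor:
    """Switch traffic to the DR/COOP site after several consecutive failed health checks."""

    def __init__(self, primary, standby, failures_to_trip=3):
        self.primary = primary
        self.standby = standby
        self.failures_to_trip = failures_to_trip  # assumed threshold
        self.consecutive_failures = 0
        self.active = primary

    def record_check(self, primary_healthy):
        """Feed in one health-check result; return the site that should serve users."""
        if primary_healthy:
            self.consecutive_failures = 0
        else:
            self.consecutive_failures += 1
        if self.consecutive_failures >= self.failures_to_trip:
            # Failing back to the primary is left as a deliberate manual step.
            self.active = self.standby
        return self.active
```

Requiring several failures in a row avoids failing over on a single dropped probe, and the same object is easy to drive with scripted results during the routine tests described in step 5.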

While we can't control Mother Nature, we can control our preemptive strikes against data disasters. A single emergency can take down a data center, but it only takes a simple plan and proper preparation to prevent disaster. Whether you bring the expertise in-house or outsource it, make the time and budget available to properly plan so you are not out of luck during the outages.
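As a starting point for the planning in step 1, the list of mission-critical applications and their dependencies can be turned into a full inventory of what the DR/COOP site must host. The sketch below walks a dependency map and collects everything the critical applications rely on, directly or indirectly. The function name and the example inventory are hypothetical.

```python
def required_components(critical_apps, depends_on):
    """Return every component the critical apps need, directly or indirectly."""
    needed = set()
    stack = list(critical_apps)
    while stack:
        item = stack.pop()
        if item in needed:
            continue  # already visited; also protects against circular dependencies
        needed.add(item)
        stack.extend(depends_on.get(item, []))
    return needed


# Hypothetical inventory: each key lists the components it depends on.
inventory = {
    "benefits-portal": ["auth-service", "claims-db"],
    "auth-service": ["directory"],
    "intranet-wiki": ["wiki-db"],
}
print(sorted(required_components(["benefits-portal"], inventory)))
```

Anything that does not appear in the result, such as the wiki in this example, does not need a mirrored VM for the critical applications to run.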

Consumer-class cloud services force IT to get aggressive with endpoint control or accept that sensitive data will be in the wind -- or take a new approach, such as reconsidering virtual desktops. Is VDI poised to bust out of niche status? For years, virtual desktops have been largely limited to spot deployments.

End-users don't like VDI for a variety of (quite legitimate) reasons, not least connectivity and customization limitations. For IT, it burns through CPU cycles, storage, and bandwidth. It takes effort to set up a logical set of images and roles and stick to them. OS and software licensing can be a nightmare. And so on. But now, cloud- and mobility-driven security concerns plus some key technology and cost-avoidance advances mean it's time to take a fresh look.

Public cloud services such as Dropbox, Google Drive, and Hightail pose a thorny problem: How can IT effectively control regulated and sensitive data when each device with an Internet connection is a possible point of compromise? Improvements in policy-driven firewalls and UTM appliances help, but BYOD initiatives make enforcing controls nearly impossible.

Meanwhile, advances in solid state storage and plummeting thin client prices equal lower deployment costs, especially in greenfield scenarios. Couple VDI with advances in network virtualization and virtual machine administration, particularly on VMware-based VDI deployments, and IT can achieve fine-grained control of network connections and desktop configurations.

Finally, new Linux-based VDI approaches and open-source hypervisors offer an ultra-low-cost option for organizations with the right skill sets and application needs.

Virtualized desktops are also an increasingly attractive alternative to terminal-based application delivery methods, including Microsoft RDS and Citrix XenApp. Decision points on whether to switch include the fact that VDI offers complete desktops with significantly better resource encapsulation and session isolation. While Windows and Linux session-based application-serving technologies can sandbox resources to an extent, their resource isolation is incomplete compared with what today's hypervisors provide. That's important because application servers are vulnerable to performance degradation in the face of high resource demand, whether by users or underlying OS configuration or maintenance issues. In comparison, virtual desktop infrastructures are much less vulnerable to resource strangleholds and configuration flaws. Yes, they require more effort and expertise to maintain and cost more up front -- though not as much as you might expect.

Prices plummet

Many a VDI feasibility study has been derailed by costs associated with the storage architecture required to provision and sustain a pool of virtual desktops. Storage bottlenecks have been the historical bane of VDI, with poorly specified, undersized, I/O-limited infrastructures largely responsible for poor performance and long wait times to redeploy desktop pools with configuration changes (cue the end-user hatefest).

Major advances in solid state storage go a long way toward mitigating both the cost and performance impact of storage on VDI deployments. Enterprise virtualized storage systems and software-defined storage architectures, such as DataCore SANsymphony and VMware Virtual SAN, incorporate SSD and spinning disk storage into high-performance tiered architectures that intelligently place often-accessed data on SSD and provide cache services to spinning disks. Ben Goodman, VMware's lead technical evangelist for end-user experience, goes as far as to assert that Virtual SAN can save 25% to 30% over a typical virtual desktop deployment via reduced storage costs, a number we consider feasible with the right setup.
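The idea of placing often-accessed data on SSD can be illustrated with a toy placement function. This is only a sketch of the general concept, not how DataCore SANsymphony or VMware Virtual SAN actually work: the function name, the block labels, and the use of a simple access count are assumptions.

```python
from collections import Counter


def plan_tiers(access_log, ssd_slots):
    """Assign the most frequently accessed blocks to SSD and the rest to spinning disk."""
    counts = Counter(access_log)
    # most_common orders by access count; ties keep first-seen order.
    hot = [block for block, _ in counts.most_common(ssd_slots)]
    cold = [block for block in counts if block not in hot]
    return {"ssd": hot, "hdd": cold}


# Hypothetical access log for desktop image blocks.
log = ["os-image", "profile-7", "os-image", "profile-9", "os-image", "profile-7"]
print(plan_tiers(log, ssd_slots=1))
```

In a VDI pool the shared OS image blocks tend to dominate the access counts, which is why a relatively small SSD tier can absorb much of the I/O load.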

Regardless of your SAN or storage architecture, today's mixed solid-state/spinning-disk volumes are about half as expensive as the same I/O characteristics in pure spinning disk configurations, and IT is taking notice. More than half of the respondents to our 2014 State of Enterprise Storage Survey say automatic tiering of storage is in pilot (22%), limited (18%), or widespread (14%) use in their organizations. Further, that same survey showed healthy growth in the use of SSDs in disk arrays, from 32% in 2013 to 40% in 2014, as falling prices bring SSDs within reach of most shops.

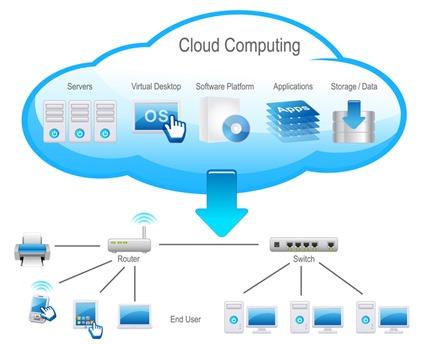

First, what does the term “cloud computing” mean? The word “cloud” comes from the cloud symbol used historically to represent the telephone network, and more recently the Internet, in network diagrams. So it’s basically a metaphor for the Internet.

Overall, cloud computing is a rather ambiguous term that takes in a number of technologies that all deliver computing as a service instead of a product.

Investopedia’s definition makes reference to ‘a cloud computing structure allowing access to information, provided an electronic device has access to the web’.

If you have used Web e-mail systems such as Hotmail, Yahoo! Mail or Gmail, then you have used a form of cloud computing. Here the user logs into their web e-mail account remotely via a browser rather than running an e-mail program locally on their computer. The mail software and storage reside on the service provider’s computer cloud and do not exist on the user’s machine. This is a good example of a cloud computing application, as shared resources such as software and information are delivered as a utility via a network, usually the Internet. This, in turn, is analogous to electricity delivered as a metered service over the grid. Users’ machines can be computers or other devices such as smartphones, dumb terminals, etc.

This brings up two major terms required to fully appreciate the world of “the cloud”. The first is utility computing, which relates to the mechanics of delivering resources, typically computation and storage, as a metered service, again analogous to other utilities such as electricity. The second is autonomic computing, which describes systems that are self-managing; the term “self-healing” has also been applied, but great care needs to be taken with that term!

Cloud-based applications are accessed through a web browser or lightweight desktop/mobile app, with the data and business software held on servers at a remote location, usually a data center. Cloud application providers (application service providers, or ASPs) aim to deliver the same or better service and performance compared with applications running locally on end-user computers.

Cloud computing applications continue to be adopted quickly, with uptake driven by the availability of high-bandwidth networks (importantly, down to the end user/client level via broadband or other high-bandwidth internet connections), low-cost high-availability disk storage, inexpensive computers, and large-scale adoption of both virtualization and IT architecture designed for the service model.

There are three basic models of cloud computing: Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS).

IaaS is the simplest, and each higher model builds on the features of the models below it.

Infrastructure as a Service (IaaS) is the most basic cloud service model: cloud providers offer computers as either physical or virtual machines, along with access to networks, storage, firewalls and load balancers. Under IaaS these are delivered on demand from large pools installed in data centers, with local area networks and IP addresses being part of the offering. For wide area connectivity, the Internet or dedicated virtual private networks are configured and used.

Cloud users, here defined as those who wish to deploy the application rather than necessarily use it, install operating system images and their application software on the machines. Under IaaS, the cloud user is responsible for patching and maintaining the OS and the application software. IaaS services are usually billed on a utility basis, so cost reflects resources allocated and consumed.
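Utility billing of this kind is simple arithmetic: multiply each metered quantity by its unit rate and add the results. The sketch below shows the calculation with made-up numbers. The resource names and the rates (expressed in cents to avoid rounding issues) are hypothetical and do not reflect any real provider's price list.

```python
def metered_cost_cents(usage, rates_cents):
    """Sum quantity x unit rate (in cents) for each metered resource."""
    return sum(quantity * rates_cents[resource] for resource, quantity in usage.items())


# Hypothetical unit rates, in cents.
rates_cents = {"vm_hours": 10, "storage_gb_month": 5, "egress_gb": 8}

# One VM running all month (720 hours), 200 GB stored, 50 GB transferred out.
usage = {"vm_hours": 720, "storage_gb_month": 200, "egress_gb": 50}

total = metered_cost_cents(usage, rates_cents)
print(f"Monthly bill: ${total / 100:.2f}")
```

Because the bill tracks consumption, shutting down idle machines lowers the cost directly, which is the main contrast with owning the hardware outright.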

Under the Platform as a Service (PaaS) model, the cloud provider typically delivers a computing platform consisting of OS, database, and web server. This enables application developers to run their software solutions on this cloud platform without needing to buy or manage the underlying hardware and software, which removes that cost and complexity. In some PaaS offerings, computing and storage resources automatically scale to match application demand, reducing complexity further as the cloud user does not have to allocate resources manually.

Under the Software as a Service (SaaS) model, cloud providers install and operate application software in their cloud, with users accessing the software using cloud clients. A significant benefit is that maintenance and support are very much simplified, as users do not manage the cloud infrastructure or the platform running the application and do not need to install or run the application on their own computers. Another major advantage of such a cloud application lies in its elasticity, achieved by cloning tasks onto multiple virtual machines as demand requires. Load balancers distribute this work over the virtual machines, and the whole process is transparent to the user, who simply sees a single access point. Cloud applications may be multi-tenant, so any machine serves more than one cloud user organization. This illustrates another major advantage of cloud computing: it can quickly accommodate large numbers of new users, and users can be quickly dropped as usage demands, which again is reflected in the pay-per-user model. These types of cloud-based application software are commonly referred to as desktop as a service, business process as a service, test environment as a service, or communication as a service.
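The elasticity and load balancing described above can be sketched in two small functions: one decides how many cloned virtual machines the current demand calls for, and the other spreads requests across them in turn. This is a simplified illustration. The function names, the VM labels, and the figure of 100 users per instance are assumptions, and real load balancers use more sophisticated policies than plain round-robin.

```python
import itertools
import math


def instances_needed(active_users, users_per_instance):
    """Number of cloned VMs required to serve current demand (always at least one)."""
    return max(1, math.ceil(active_users / users_per_instance))


def round_robin(requests, instances):
    """Assign each request to the next instance in turn; return (request, instance) pairs."""
    pool = itertools.cycle(instances)
    return [(request, next(pool)) for request in requests]


# Hypothetical demand: 250 active users, each VM comfortably serving 100.
count = instances_needed(250, 100)
vms = [f"vm-{n}" for n in range(1, count + 1)]
print(round_robin(["req-a", "req-b", "req-c", "req-d"], vms))
```

From the user's side none of this is visible: requests go to a single address, and the pool behind it grows or shrinks as `instances_needed` changes.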

The pricing model for SaaS applications is typically either a monthly or yearly flat fee per user. If you are looking for a cloud service provider, browse qwertycstaging.wpengine.com.